The increasing use of AI tools, such as ChatGPT, in research raises significant questions about research ethics. Learn why ethical considerations matter when using AI in research, which six key challenges researchers may encounter, and how these challenges play out in real-world examples. The post also offers practical guidance on how to address these ethical issues.

Disclosure: This post has been sponsored by Editage and may contain affiliate links, which means I may earn a small commission, at no additional cost to you, if you make a purchase using the links below. I only recommend products or services that I truly believe can benefit my audience. As always, my opinions are my own.

The tricky use of AI tools in research

The integration of artificial intelligence (AI) tools in academia has sparked heated debates among researchers. This technological advancement, exemplified by ChatGPT, has created two distinct camps: those who embrace AI wholeheartedly and envision it as the future of academia, and those who are vehemently against it.

Regardless of which camp you belong to, it is evident that ChatGPT and similar AI tools will have a profound impact on academic research and education. As several scholars explained in a 2019 publication:

“Artificial Intelligence (AI), or the theory and development of computer systems able to perform tasks normally requiring human intelligence, is widely heralded as an ongoing ‘revolution’ transforming science and society altogether.”

Jobin et al. (2019)

AI may offer valuable opportunities in research when used properly. One of the primary benefits of generative AI is the speed and efficiency it offers. With the ability to process and analyze vast amounts of data in a fraction of the time it would take a human researcher, generative AI can greatly accelerate the research process. This, in turn, can lead to new discoveries and breakthroughs in a variety of fields.

In a way, generative AI has also levelled the playing field between native and non-native speakers of English, easing the manuscript publication process for the latter.

Despite these opportunities, it is crucial to acknowledge the potential pitfalls of using AI in research. One of the primary concerns is data privacy and security. Because generative AI systems are used to analyze large amounts of data, researchers may end up giving them access to sensitive information. This can include everything from personally identifiable information to medical records, financial data, and more.

To address these concerns, it is important that researchers and academic institutions take steps to ensure that data is stored and handled in a secure manner. This includes implementing strong encryption and security protocols, limiting access to sensitive data, and ensuring that all data is anonymized and de-identified where necessary.
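To make the de-identification step more concrete, here is a minimal sketch in Python of pseudonymizing an interview transcript before it leaves your own machine. It assumes you already hold a list of participant names (for example, from your consent records); the function names and the `P01`, `P02` code scheme are hypothetical illustrations, not a standard tool. Note that simple replacement like this is pseudonymization, not full anonymization: indirect identifiers such as job titles, places, or distinctive events remain and still need manual review.

```python
import re

# Rough pattern for email addresses; it will not catch every valid format.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def build_pseudonym_map(names):
    """Assign each known participant name a code (P01, P02, ...) in list order.

    Hypothetical helper: the list of names is assumed to come from the
    researcher's own consent records.
    """
    return {name: f"P{i:02d}" for i, name in enumerate(names, start=1)}


def pseudonymize(text, pseudonym_map):
    """Replace known names (case-insensitively) and email addresses in a transcript.

    Longer names are replaced first, so "Anna Smith" is handled before a
    shorter entry such as "Anna" could split it.
    """
    for name in sorted(pseudonym_map, key=len, reverse=True):
        # \b stops "Ann" from matching inside "Annual"; re.escape guards
        # against names that contain regex special characters.
        pattern = rf"\b{re.escape(name)}\b"
        text = re.sub(pattern, pseudonym_map[name], text, flags=re.IGNORECASE)
    return EMAIL_PATTERN.sub("[EMAIL]", text)


if __name__ == "__main__":
    mapping = build_pseudonym_map(["Anna Smith", "Ben"])
    transcript = "Anna Smith said Ben emailed her at anna.smith@example.org."
    print(pseudonymize(transcript, mapping))
```

Keep the name-to-code mapping stored separately and securely: anyone who holds both the mapping and the pseudonymized transcript can re-identify your participants.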

Another challenge associated with generative AI is the potential for bias and discrimination. Because these systems are trained on existing data sets, they can replicate and even amplify existing biases and prejudices. This can have serious consequences, particularly in fields such as medicine and law, where decisions based on biased data can have real-world impacts on people’s lives.

To mitigate the risk of bias and discrimination, it is important that researchers and institutions take steps to ensure that their data sets are diverse and representative. This includes actively seeking out data from underrepresented groups and working to eliminate any biases that may already exist in the data.

Finally, it is important to recognize that generative AI is not a replacement for human researchers. While these systems can greatly accelerate the research process, they are not capable of the kind of nuanced and complex thinking that is required for many research tasks. Therefore, it is important to view generative AI as a tool to be used in conjunction with human expertise, rather than a replacement for it.

Why ethics matter when using AI in research

The use of AI tools in research creates a complex set of challenges that demand careful attention and robust solutions. These challenges are closely tied to research ethics.

According to Siau and Wang (2020, p. 75), “ethics are a system of principles or rules or guidelines that help determine what is good or right.”

Research ethics cover the whole process, from the research design phase to the implementation and dissemination of results.

There are significant risks associated with AI tools in academic research, which include issues like algorithmic bias or data privacy. Neglecting these ethical concerns can undermine the integrity of your research and negatively affect your research career.

Thus, upholding research ethics and standards should be a primary ambition for any serious researcher. It starts with awareness of the key ethical challenges that can emerge when using AI tools during the research process.

6 key ethical challenges when using AI in research

The academic literature on the ethics of AI in research is limited. However, researchers have engaged with the topic of AI ethics in general for a considerable period.

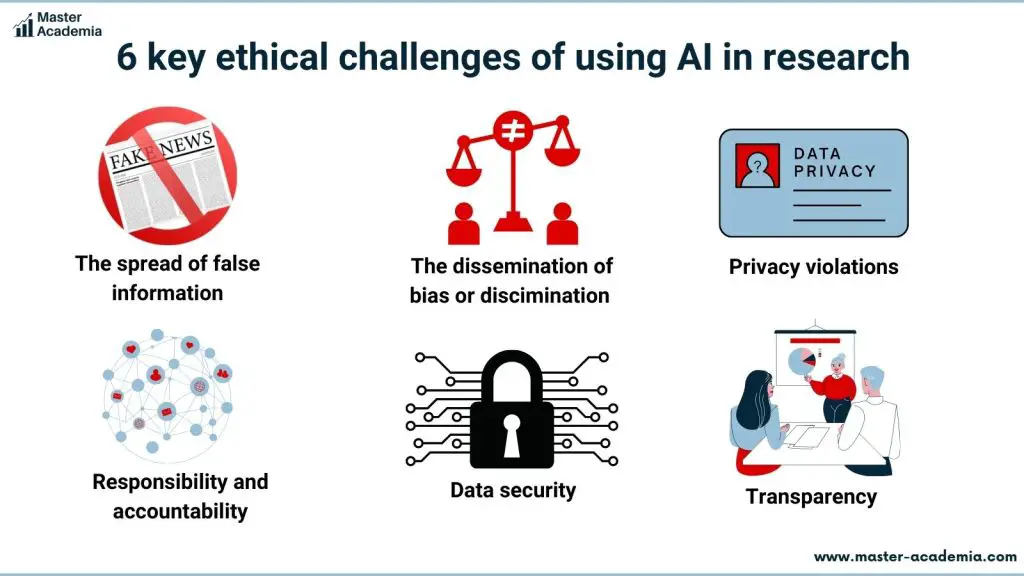

The six key ethical challenges that emerge from the academic literature on AI ethics — with direct repercussions on the use of AI in research — are the spread of false information, responsibility and accountability, the dissemination of bias or discrimination, transparency, privacy violations, and data security.

Here is the full list with explanations:

- The spread of false information: AI tools can produce false information. They generate output based on existing data, so if this data is inaccurate, the tools may also generate inaccurate information; language models can also produce plausible-sounding statements that have no factual basis at all. Thus, AI tools are not reliable sources when it comes to factual knowledge. If researchers nonetheless rely on the information generated by AI tools, they run the risk of spreading false information.

- Responsibility and accountability: Determining responsibility and accountability in the context of AI can be difficult. When it comes to using AI in research, however, it seems rather straightforward: as a researcher, it is your responsibility to follow ethical standards and provide accurate information. If you do not, you can be held accountable.

- The dissemination of bias or discrimination: Not only can AI tools generate false information, they can also perpetuate human biases, such as gender or racial biases, through the content they produce. This is in direct conflict with the ‘no harm principle’, which is fundamental to ethical research practices.

- Transparency: Researchers who enter individuals’ (sensitive) data into an AI tool without those individuals’ prior permission violate transparency principles. Transparency means that people have the right to fully understand how their data and information are used. Yet the use of AI tools is often not mentioned in informed consent forms, which is a major ethical issue!

- Privacy violations: Closely connected to transparency is people’s right to privacy. Privacy violations in the context of AI in research refer to instances where personal data is collected, processed, or shared without the individual’s knowledge or consent, or in violation of privacy regulations.

- Data security: Another key ethical challenge is data security. Even if researchers have permission to enter people’s data into AI tools during the research process, it is difficult for them to ensure data security because they are not in control of the AI tool itself.

Examples of ethical dilemmas of using AI in research

Example of the spread of false information as ethical dilemma of using AI in research

When ChatGPT was launched, researchers quickly discovered that it can create fake references. This is a clear example of how AI tools can spread false information when used for research. In other instances, false information is generated more subtly, for example in data or in explanations of concepts and phenomena. For more information on this, see the post by Mathew Hillier, which explains the inner workings of ChatGPT and how it produces academic content.

Example of responsibility and accountability issues as ethical dilemma of using AI in research

If you rely on AI tools without fact-checking the information they provide (be it newly generated content or summaries of existing research), you fail to meet your responsibility and are accountable for the consequences. For instance, if you rely on ChatGPT for statistics or explanations in your research paper and they turn out to be false, you are responsible for the mistake. You can also be held accountable for spreading misinformation.

Example of dissemination of bias or discrimination as ethical dilemma of using AI in research

When AI language models such as ChatGPT are trained on biased data that unfairly represents specific groups, they can contribute to the reinforcement of social stereotypes and discriminatory outcomes. For instance, if you use ChatGPT to analyze data that includes gender or racial markers, the generated analysis may be biased. It may include and accentuate stereotypes and discrimination, not because of your input data, but because of the biased online information used as training data.

Example of transparency issues as ethical dilemma of using AI in research

Imagine that you conducted in-depth interviews as part of your qualitative research design. Your interviewees signed an informed consent form, and you told them that you would ensure their anonymity. Now, however, you want to speed up your interview analysis by running the transcripts through an AI tool. If you do so without explicit permission from your interviewees, you are not fulfilling your transparency obligation!

Example of privacy violation as ethical dilemma of using AI in research

AI tools run the risk of violating privacy. ChatGPT, for instance, records user inputs, a practice for which it has received backlash from privacy experts. Thus, if you as a researcher provide data and information to AI tools, you run the risk of violating your respondents’ privacy. There have also been reports of this information being shared with advertisers. Some countries even banned ChatGPT over concerns about GDPR non-compliance and about ChatGPT sharing data with businesses and affiliates.

Example of data security violation as ethical dilemma of using AI in research

Tools like ChatGPT are not foolproof. There have been instances where ChatGPT users could see other users’ conversation histories and private email addresses. As a researcher, you have no direct control over the data security of the AI tools you use, which creates serious ethical problems.

How to address ethical issues when using AI in research

The first step is to reflect clearly on the ethical issues your research may raise, and to always obtain ethics approval from your institution. In practice, this comes down to three steps:

- Educate yourself about research ethics and AI. Addressing ethical issues begins with awareness of potential pitfalls of using AI in research, and a critical reflection on your own research.

- Get ethics approval from your institution. Before using AI tools in your research, make sure you receive official ethics approval from your university or research body. AI regulations may be context-specific, and applying for ethics approval forces you to develop firm solutions to the ethical challenges that may arise with your intended use of AI in your research.

- Make use of professional human editing services and writing support. As generative AI tools are relatively new, discussions and regulations surrounding their ethical use are still in their early stages. To navigate the ethical challenges associated with AI tools in research, one effective strategy is to adopt a tried-and-true approach that researchers have used for decades: engaging professional editing services. A reputable provider like Editage offers advanced editing services while upholding the highest standards of data security.

FAQs

Can I use AI to write a research paper?

Using AI to write a research paper is certainly possible, but it raises ethical issues that need careful consideration. AI can generate false and biased information, and data privacy and security issues may arise when using AI tools. Furthermore, AI detection capabilities are advancing alongside AI tools, so there is a real chance that people reviewing your research paper will notice your use of AI.

What are the ethical concerns about using AI-generated text in scientific research?

The main ethical concerns surrounding AI-generated texts revolve around false information, the propagation of bias or discrimination, and issues of responsibility and accountability. Additionally, when utilizing AI, there is a risk of privacy violations, compromised data security, and a lack of transparency as you input your scientific research into an AI tool. A safer option is to make use of a professional editing service to improve your writing.

Is it ethical to use ChatGPT in research?

The ethical implications of using ChatGPT in research depend on its usage. Employing ChatGPT for brainstorming or structuring a manuscript may be considered ethical. However, relying solely on information generated by ChatGPT is unethical. Furthermore, feeding your research and data into the system can present ethical challenges related to transparency, privacy, and data security.

Can I use ChatGPT for a research paper?

In a research paper, ChatGPT can be used for idea generation and for formulating concise statements. However, never rely on information generated by ChatGPT without proper fact-checking, and avoid over-reliance on the tool in general. Additionally, be aware of the ethical challenges that may arise when you input information into the system, such as bias and discrimination, transparency, privacy protection, and accountability. To avoid these potential pitfalls, it is recommended to seek writing and editing support from a professional editing service rather than using ChatGPT.

Can professors tell when you use ChatGPT?

AI detection capabilities are developing alongside AI text generation tools, so there is a real chance that your professors can tell when you use ChatGPT. Even if your university currently lacks a robust detection system, it is common practice for universities to store student work on their servers for an extended period. Therefore, the possibility of detection remains even after submission.